White Paper: Blueprint for AI-Powered Enterprise Fraud Detection Solutions Using Model Context Protocol (MCP), LangChain, and Generative Agents

AI Use Cases in Banking

For people who don’t want to read a long article, a podcast version of the white paper is available here (or copy and paste this URL: https://kasrangan.substack.com/publish/post/166448243)

1. Introduction

Fraud threats in banking and financial services are evolving faster than ever, demanding real-time, context-sensitive responses. Legacy fraud systems, built on static rules and isolated data sources, often fail to detect complex threats and suffer from high false-positive rates, slow investigations, and privacy blind spots.

This white paper presents a comprehensive blueprint for modernizing enterprise fraud detection using cutting-edge technologies, such as:

Model Context Protocol (MCP) for unified data context

LangChain for reasoning and memory-based orchestration

GenAI agent frameworks (such as LangGraph and n8n) for intelligent decision-making and workflows

The resulting system enables scalable, adaptive, and compliant fraud management across borders and channels.

This white paper outlines how an integrated, context-aware architecture can overcome the limitations of traditional fraud solutions through context-driven integrations, stronger compliance, real-time reasoning, and intelligent workflows.

2. Why Traditional Fraud Solutions Fall Short

Legacy fraud detection systems have several limitations. The key ones are that they:

Operate in silos, unable to integrate cross-platform data

Use hard-coded rules, failing to detect emerging fraud patterns

Generate high false-positive rates, overwhelming analysts

Are not compliance-aware by design (for example, they cannot dynamically adjust to regional privacy laws)

Lack the ability to reason with contextual or historical insights

Rely on rigid workflow mechanisms

Produce only static reports

The architecture proposed in this paper aims to address these challenges by embedding context-aware, privacy-respecting AI workflows into every layer of fraud detection.

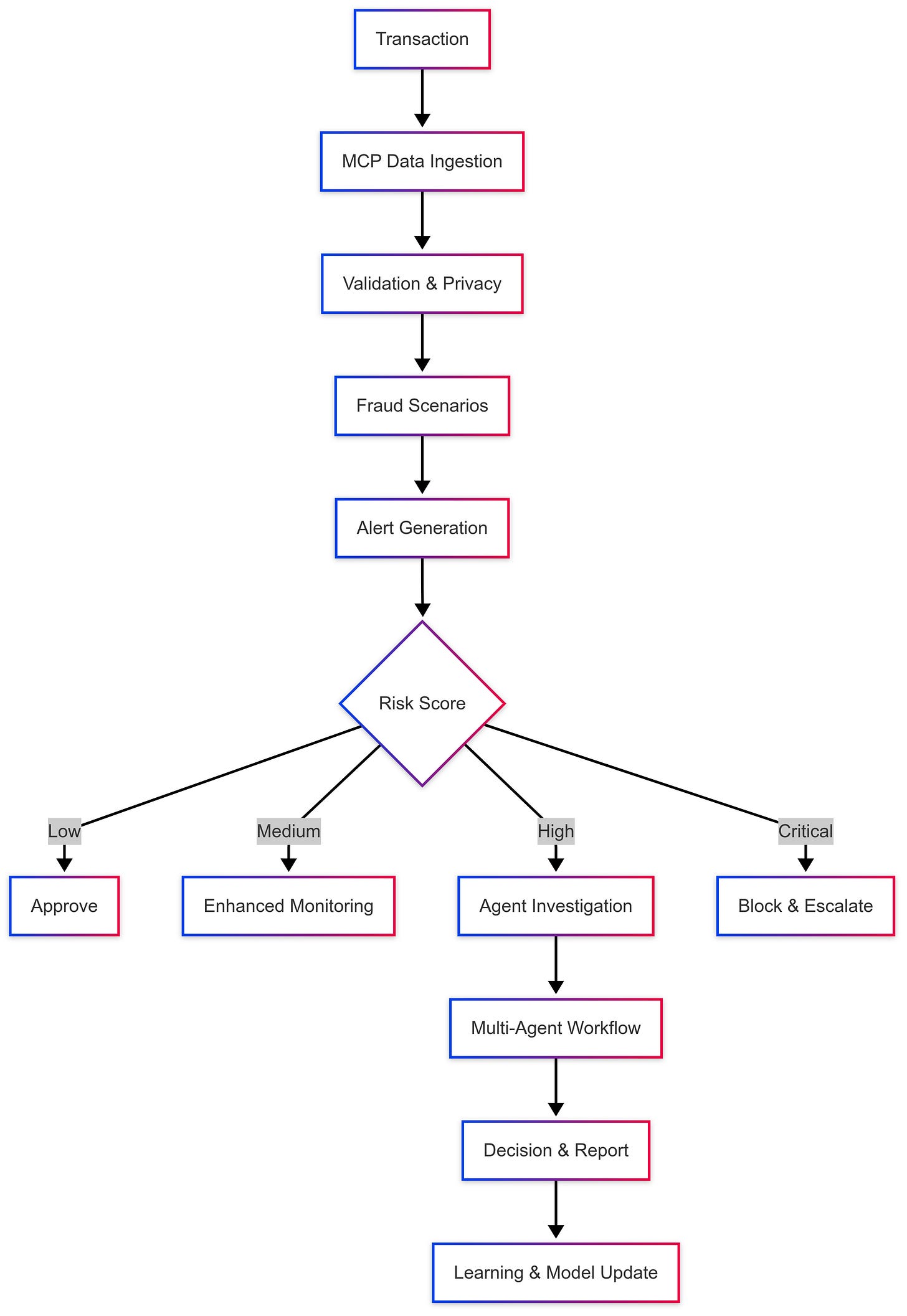

3. Proposed Architecture for GenAI-Based Integrated Fraud Detection

To overcome the above limitations, the proposed architecture unifies multiple AI components, such as:

MCP: Context-aware protocol for standardized data ingestion

LangChain: Orchestrates logic with memory, validation, and decision layers

Agents: Handle specialized tasks like triage, remediation, verification, and reporting

Conceptual Diagram of AI-Powered Fraud Monitoring Stack

4. Data Integration via Model Context Protocol (MCP)

4.1 What is Model Context Protocol

Model Context Protocol (MCP) is an open-source protocol, developed by Anthropic, that enables AI models to securely connect to and interact with external data sources and tools through standardized interfaces.

In the context of fraud risk monitoring, MCP can serve as a bridge between AI-based fraud risk detection models and various data systems, such as Core Banking, Loan Origination/Management Systems, and External Data Sources.

Specifically, MCP would allow AI models to:

Access Multiple Data Sources: Connect to databases, APIs, real-time transaction streams, customer databases, and third-party fraud intelligence feeds through standardized connectors, eliminating the need for custom integrations for each data source.

Real-time Data Integration: Pull live transaction data, account information, and behavioral patterns as they occur, enabling immediate fraud detection without waiting for batch processing cycles.

Secure Data Handling: Maintain data privacy and security through controlled access protocols, ensuring sensitive financial and personal information remains protected while still being accessible to fraud detection models.

Tool Integration: Connect fraud detection models to response systems like transaction blocking, alert generation, or case management platforms, creating an end-to-end automated fraud prevention pipeline.

Contextual Enrichment: Allow models to gather additional context about transactions, users, or patterns from multiple sources simultaneously, improving detection accuracy by providing a more complete picture of potentially fraudulent activity.
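Under the hood, MCP messages are exchanged as JSON-RPC 2.0, and a client asks a server to run one of its tools with a `tools/call` request. The minimal sketch below only builds such a request; the tool name `get_transaction_history` and its arguments are hypothetical, since each MCP server defines its own tools and input schemas.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC 2.0 pairs each response with its request id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message.

    The tool name and argument schema are defined by whichever MCP server
    exposes the tool; the ones used below are hypothetical.
    """
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by a core-banking MCP server.
request = build_tool_call(
    "get_transaction_history",
    {"account_id": "ACC123456789", "lookback_days": 180},
)
print(request)
```

In practice an MCP client SDK handles this framing along with connection setup and capability negotiation; the point is that every data source is reached through the same message shape rather than a bespoke integration.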

4.2 Multi-Source Ingestion with MCP

As noted in Section 2, traditional systems struggle with fragmented data pipelines. With MCP, the platform can securely ingest both real-time and batch data from diverse sources, such as:

Core Banking Systems: Transactions, Accounts, Customer info

Payment Networks: Authorization logs, Merchant Data

Regulatory Feeds: OFAC sanctions, PEP watchlists

External Services: Behavioral Biometrics, Device Intelligence
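One way to picture standardized connectors is a set of small adapters that map each source's native record into one common shape before it is exposed to models. This is a sketch under assumptions: the field names on both sides (`txn_ref`, `auth_code`, `pan_token`, and so on) are hypothetical, and real core-banking and payment-network schemas differ by vendor.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class NormalizedEvent:
    """Common shape every source is mapped into (hypothetical schema)."""
    source_system: str
    event_id: str
    account_id: str
    amount: float
    currency: str


def from_core_banking(raw: dict) -> NormalizedEvent:
    # Hypothetical core-banking field names.
    return NormalizedEvent(
        source_system="core_banking",
        event_id=raw["txn_ref"],
        account_id=raw["acct_no"],
        amount=float(raw["amt"]),
        currency=raw["ccy"],
    )


def from_payment_network(raw: dict) -> NormalizedEvent:
    # Hypothetical authorization-log fields; amounts arrive in minor units (cents).
    return NormalizedEvent(
        source_system="payment_network",
        event_id=raw["auth_code"],
        account_id=raw["pan_token"],
        amount=raw["amount_minor"] / 100,
        currency=raw["currency"],
    )


# Registry: adding a new source means adding one adapter, not a new pipeline.
ADAPTERS: dict[str, Callable[[dict], NormalizedEvent]] = {
    "core_banking": from_core_banking,
    "payment_network": from_payment_network,
}


def ingest(source: str, raw: dict) -> NormalizedEvent:
    return ADAPTERS[source](raw)


events = [
    ingest("core_banking", {"txn_ref": "TXN1", "acct_no": "ACC123", "amt": "150.25", "ccy": "EUR"}),
    ingest("payment_network", {"auth_code": "A77", "pan_token": "tok_9",
                                "amount_minor": 2000000, "currency": "USD"}),
]
for event in events:
    print(event)
```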

4.3 Metadata via MCP

Because every source is exposed through the same protocol, new sources can be added in a plug-and-play manner, and each record can carry a consistent set of metadata.

For example, each record can be tagged with:

Source identifier

Region specific regulatory jurisdiction (e.g., GDPR for Europe)

Channel context (Web/Mobile/API)

Customer risk segment
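A minimal sketch of how an ingestion step might attach these four tags to a record. The country-to-regime table, the regime labels, and the risk-segment rule are illustrative assumptions only; a production system would source them from governed reference data.

```python
# Hypothetical mapping from customer country (ISO 3166-1 alpha-2) to privacy regime label.
JURISDICTION_BY_COUNTRY = {
    "DE": "GDPR_EU",
    "FR": "GDPR_EU",
    "JP": "APAC_JP",
}


def tag_record(record: dict, source_id: str, country: str, channel: str,
               is_cross_border: bool, prior_alerts: int) -> dict:
    """Return a copy of `record` wrapped with MCP-style metadata tags."""
    # Illustrative segmentation rule, not a recommended risk model.
    if is_cross_border and prior_alerts > 0:
        segment = "HighRisk_International"
    elif is_cross_border:
        segment = "Standard_International"
    else:
        segment = "Standard_Domestic"
    return {
        **record,
        "mcp_metadata": {
            "source_system": source_id,
            "region_jurisdiction": JURISDICTION_BY_COUNTRY.get(country, "UNCLASSIFIED"),
            "channel_context": channel,
            "customer_risk_segment": segment,
        },
    }


tagged = tag_record({"transaction_id": "TXN987654321", "amount": 15000.00},
                    source_id="core_banking_system_v3", country="DE",
                    channel="MobileApp", is_cross_border=True, prior_alerts=2)
print(tagged["mcp_metadata"])
```

Unknown countries fall back to `UNCLASSIFIED` rather than a default regime, so downstream components can treat them conservatively.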

4.4 Illustrative JSON under MCP

The illustrative record below shows how MCP metadata can drive downstream behavior:

Privacy Controls: If region_jurisdiction = GDPR_EU, the solution automatically applies data masking and data residency constraints.

Contextual Processing: If the agent role is Pattern_Analysis_Agent, only tokenized PII is allowed.

Scenario Tuning: A customer risk segment of HighRisk_International triggers enhanced fraud scenario chains for cross-border flows.

Channel-Specific Logic: A channel context of MobileApp invokes mobile behavior analysis (e.g., screen-scraping detection).

Geo-IP and Device Fingerprint Checks: These fields are available for velocity and behavioral scenarios.

Auditability: Data lineage and transformation history are embedded for traceability and compliance audits.

```json
{
  "transaction_id": "TXN987654321",
  "timestamp": "2025-06-15T10:42:23Z",
  "amount": 15000.00,
  "currency": "USD",
  "origin_account": "ACC123456789",
  "destination_account": "ACC987654321",
  "merchant_category": "Cross-border Payments",
  "channel": "MobileApp",
  "mcp_metadata": {
    "source_system": "core_banking_system_v3",
    "region_jurisdiction": "GDPR_EU",
    "customer_region": "DE",
    "customer_risk_segment": "HighRisk_International",
    "channel_context": "MobileApp",
    "consent_status": "Active",
    "privacy_policy_version": "GDPR-v4.3",
    "device_id": "device-fp-234987abc",
    "ip_address": "185.23.45.89",
    "ip_reputation": "MediumRisk",
    "geo_ip_location": {
      "country": "Germany",
      "city": "Berlin",
      "lat": 52.5200,
      "lon": 13.4050
    },
    "agent_role_context": {
      "current_processing_agent": "Pattern_Analysis_Agent",
      "allowed_data_access_level": "Tokenized_PII"
    },
    "data_lineage": {
      "ingested_timestamp": "2025-06-15T10:42:25Z",
      "source_pipeline_version": "v5.1",
      "last_transformation_stage": "geo-risk-enrichment-stage"
    }
  }
}
```
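To make the rules listed before the payload concrete, the sketch below reads an `mcp_metadata` block shaped like the example and derives which privacy controls and scenario chains apply. The control and scenario names (`mask_pii`, `cross_border_enhanced`, `mobile_behavior`, and so on) are hypothetical labels, not components defined by MCP.

```python
def plan_processing(payload: dict) -> dict:
    """Derive privacy controls and scenario chains from MCP-style metadata."""
    meta = payload["mcp_metadata"]
    plan = {"privacy_controls": [], "scenario_chains": ["baseline_rules"]}

    # Privacy controls: EU jurisdiction triggers masking and data-residency limits.
    if meta["region_jurisdiction"] == "GDPR_EU":
        plan["privacy_controls"] += ["mask_pii", "eu_residency_only"]

    # Contextual processing: restrict what the current agent may see.
    access = meta.get("agent_role_context", {}).get("allowed_data_access_level")
    if access == "Tokenized_PII":
        plan["privacy_controls"].append("tokenize_pii")

    # Scenario tuning by risk segment and channel.
    if meta["customer_risk_segment"] == "HighRisk_International":
        plan["scenario_chains"].append("cross_border_enhanced")
    if meta["channel_context"] == "MobileApp":
        plan["scenario_chains"].append("mobile_behavior")

    # Geo-IP and device checks run only when both fields are present.
    if "geo_ip_location" in meta and "device_id" in meta:
        plan["scenario_chains"].append("velocity_geo_device")
    return plan


example = {
    "transaction_id": "TXN987654321",
    "mcp_metadata": {
        "region_jurisdiction": "GDPR_EU",
        "customer_risk_segment": "HighRisk_International",
        "channel_context": "MobileApp",
        "device_id": "device-fp-234987abc",
        "geo_ip_location": {"country": "Germany", "city": "Berlin"},
        "agent_role_context": {"allowed_data_access_level": "Tokenized_PII"},
    },
}
print(plan_processing(example))
```

Because the decision reads only metadata, the same function serves every source system that emits this envelope.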

To summarize: The protocol can standardize how fraud detection AI systems communicate with the complex ecosystem of financial data sources, making it easier to deploy sophisticated fraud monitoring across different institutions and data environments without extensive custom development work.

5. LangChain-Based Data Quality Assessment, Validation, and Remediation

5.1 What is LangChain

LangChain is an open-source framework that helps developers build powerful applications using large language models (LLMs) like GPT or Claude.

It provides tools to connect LLMs with external data sources (databases, APIs, documents) and to create multi-step workflows or "chains" of reasoning.

5.2 Applications of LangChain in Fraud Risk Management

Illustratively, LangChain can support fraud risk management in the following ways:

Format Validation

LangChain can validate whether data fields such as dates, account numbers, or phone numbers match expected formats using regex, schema validators, or prompt-based checks.

Referential Integrity Checks

Agents can query databases or vector stores to confirm that referenced entities (e.g., customer IDs in a transaction) exist in the source system.

Business Rule Consistency

Domain-specific rules, such as “a loan cannot be disbursed before it is approved”, can be encoded using conditional prompt logic or custom tools to ensure data flows comply with internal policies.

Geo-IP Coherence

LangChain can integrate with IP geolocation APIs and evaluate whether a user’s login IP aligns with their declared location or past behavior, flagging geographic inconsistencies.

Duplicate Detection

By using embedding-based similarity search (e.g., with FAISS or Chroma), LangChain agents can detect near-duplicate transactions, even when descriptions or formats differ slightly, enhancing the system’s ability to catch subtle fraud.

Orchestrated Workflow

These validation steps can be chained into a cohesive, explainable pipeline where each step feeds into a central Validator Agent that returns pass/fail decisions with traceable justifications.
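The chained pipeline can be sketched in plain Python: each check returns a verdict plus a justification, and a final validator aggregates them. In a LangChain deployment each check could be wrapped as a tool or runnable, with an LLM step added for fuzzy cases; that wiring is omitted here. The account-number pattern and the in-memory `KNOWN_ACCOUNTS` registry are assumptions for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class CheckResult:
    check: str
    passed: bool
    justification: str


# Hypothetical account format: "ACC" followed by nine digits.
ACCOUNT_PATTERN = re.compile(r"^ACC\d{9}$")
# Stand-in for a lookup against the system of record.
KNOWN_ACCOUNTS = {"ACC123456789", "ACC987654321"}
ACCOUNT_FIELDS = ("origin_account", "destination_account")


def check_format(txn: dict) -> CheckResult:
    bad = [f for f in ACCOUNT_FIELDS if not ACCOUNT_PATTERN.fullmatch(txn.get(f, ""))]
    return CheckResult("format", not bad,
                       f"malformed fields: {bad}" if bad else "account formats valid")


def check_referential_integrity(txn: dict) -> CheckResult:
    missing = [txn.get(f) for f in ACCOUNT_FIELDS if txn.get(f) not in KNOWN_ACCOUNTS]
    return CheckResult("referential_integrity", not missing,
                       f"unknown accounts: {missing}" if missing else "all accounts exist")


def validator_agent(txn: dict, checks) -> tuple[bool, list[CheckResult]]:
    """Run every check; pass only if all pass, keeping justifications for audit."""
    results = [check(txn) for check in checks]
    return all(r.passed for r in results), results


ok, results = validator_agent(
    {"origin_account": "ACC123456789", "destination_account": "ACC00000"},
    [check_format, check_referential_integrity],
)
print(ok)
for r in results:
    print(r.check, r.passed, r.justification)
```

Running every check (rather than stopping at the first failure) keeps the full set of justifications available for the audit trail.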

5.3 Key Benefits of Using LangChain for Data Validation (vs. Traditional DB Validations)

1. Intelligent Contextual Validation

LangChain can leverage LLMs to reason through ambiguous or fuzzy data, such as "John D." vs "John David" or "10k paid to XYZ" vs "INR 10,000 transferred to XYZ Corp."

Traditional DB validation relies on strict rules—e.g., exact matches, schema constraints—often missing edge cases that require human-like interpretation.
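A cheap deterministic pre-filter can decide which pairs are worth sending to an LLM for this kind of reasoning. The sketch uses the standard-library `difflib.SequenceMatcher` as a stand-in for LLM judgment (a swapped-in technique, not something LangChain provides), and the 0.6 and 0.95 thresholds are arbitrary assumptions.

```python
import re
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    # Lowercase and drop punctuation so "John D." and "john d" compare equal.
    return re.sub(r"[^\w\s]", "", name).lower().strip()


def triage_name_pair(a: str, b: str, low: float = 0.6, high: float = 0.95) -> str:
    """Classify a name pair as a match, a mismatch, or needing LLM review."""
    score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
    if score >= high:
        return "match"
    if score < low:
        return "mismatch"
    return "send_to_llm"  # ambiguous: let the LLM reason over wider context


print(triage_name_pair("John D.", "John David"))
print(triage_name_pair("John David", "JOHN DAVID"))
print(triage_name_pair("John David", "Maria Lopez"))
```

Only the ambiguous middle band reaches the LLM, which keeps cost and latency bounded while preserving the contextual reasoning described above.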

2. Multi-source, Multi-modal Validation

LangChain allows orchestrating validation logic that spans multiple data sources—APIs, vector stores, unstructured PDFs, or logs.

DB validations are limited to what is present in the structured schema, usually within a single RDBMS.

3. Flexible Business Rule Encoding

LangChain can dynamically evaluate complex, conditional business rules using prompt templates or agent logic.

Traditional approaches require hard-coded SQL logic or stored procedures, which are inflexible and harder to maintain as rules evolve.
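As a sketch of what dynamic rule encoding can mean, rules can live as data loaded at runtime rather than as stored procedures. The rule below encodes the example from Section 5.2 (a loan cannot be disbursed before it is approved); the rule format is a hypothetical convention, and in a LangChain pipeline the same rules could also be rendered into a prompt for an LLM to evaluate against free-text records.

```python
from datetime import date

# Rules as data: loadable from a config store and changeable without a schema migration.
# Each rule says: if field `after` is set, field `before` must be set and not later than it.
ORDERING_RULES = [
    {"id": "LOAN-001", "before": "approved_on", "after": "disbursed_on",
     "message": "A loan cannot be disbursed before it is approved."},
]


def evaluate_rules(record: dict, rules: list[dict]) -> list[str]:
    """Return human-readable violations for every ordering rule the record breaks."""
    violations = []
    for rule in rules:
        first, second = record.get(rule["before"]), record.get(rule["after"])
        if second is not None and (first is None or second < first):
            violations.append(f"{rule['id']}: {rule['message']}")
    return violations


loan = {"loan_id": "LN-42", "approved_on": date(2025, 6, 10), "disbursed_on": date(2025, 6, 3)}
print(evaluate_rules(loan, ORDERING_RULES))
```

Adding a new policy means appending a rule entry; the evaluator and the narrative violation messages stay the same.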

4. External API Integration

LangChain agents can call external APIs (e.g., Geo-IP lookups, blacklist checks, identity validation) during runtime and use the results to validate records.

Most database engines are not designed to call external APIs or run asynchronous validation pipelines as part of their native validation logic.

5. Explainability & Human-like Reasoning

LangChain enables the generation of natural language explanations for why data passed or failed a check—ideal for audit trails and regulator-facing systems.

DB validations provide binary outcomes (pass/fail) with no narrative context.

6. Handling Unstructured & Semi-structured Data

LangChain works seamlessly with documents, emails, voice transcripts, logs, and other forms of unstructured data.

Traditional DB validations operate on structured tables only, and can’t reason over narrative or free-text data.

7. Adaptive & Learning Capabilities

LangChain pipelines can be adapted to changing patterns (e.g., new fraud tactics, evolving language in transactions) by updating prompts, retrieved examples, or the underlying model, without schema changes.

DB validations are static and need manual updates for rule changes.

To summarize: LangChain offers a more flexible and more capable mechanism than traditional database checks for validating diverse forms of data, especially unstructured data.

6. MCP-Powered Privacy & Regulatory Compliance

One of the critical shortcomings of legacy fraud detection systems is that privacy controls and regulatory logic are often applied in a fragmented and manual way, mostly after the detection layer. This leads to:

Overexposure of sensitive data

Inconsistent application of privacy rules

Increased risk of non-compliance with GDPR, CCPA, and regional regulations

In the proposed architecture, MCP (Model Context Protocol) addresses this by carrying contextual metadata about agent roles, user jurisdiction, consent status, and data sensitivity in each data payload.

This allows every component in the fraud detection pipeline—whether an AI agent, an LLM chain, or a report generator—to make informed, automated privacy decisions before using or displaying the data.

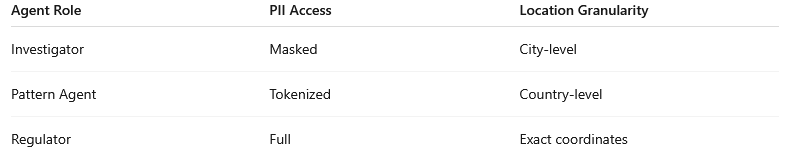

6.1 Dynamic Field-Level Masking through MCP

MCP ensures that when data is shared with agents (such as Investigators, Pattern Agents, or Compliance Officers), the payload includes metadata flags, for example:

Illustrative Privacy Settings

```json
{
  "agent_role": "Investigator",
  "customer_jurisdiction": "EU",
  "consent_status": "active",
  "privacy_policy_version": "GDPR-v4.2"
}
```

This allows the system to apply field-level masking dynamically, based on the agent's role and the customer's jurisdiction.

Consequently, an LLM or Agent does not need to hard-code privacy logic; it reads the MCP metadata and automatically applies the masking policy aligned with the jurisdiction and role.
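A minimal sketch of metadata-driven masking: a policy table keyed by jurisdiction and agent role lists how each field should be treated, and one generic function applies it. The roles, field names, and masking choices are illustrative assumptions, not requirements stated by GDPR or defined by MCP.

```python
import hashlib

# Hypothetical policy: (jurisdiction, agent_role) -> {field: treatment}.
MASKING_POLICY = {
    ("EU", "Investigator"): {"account_number": "last4", "customer_name": "keep", "email": "redact"},
    ("EU", "Pattern_Agent"): {"account_number": "token", "customer_name": "token", "email": "redact"},
}


def _apply(value: str, treatment: str) -> str:
    if treatment == "keep":
        return value
    if treatment == "last4":
        return "*" * (len(value) - 4) + value[-4:]
    if treatment == "token":
        # Deterministic pseudonym so the same value links across records.
        # Illustrative only: production tokenization would use a keyed or vaulted scheme.
        return "tok_" + hashlib.sha256(value.encode()).hexdigest()[:12]
    return "[REDACTED]"


def mask_for_agent(record: dict, context: dict) -> dict:
    """Mask fields according to the jurisdiction and role carried in the metadata."""
    policy = MASKING_POLICY.get((context["customer_jurisdiction"], context["agent_role"]), {})
    # Fields without an explicit treatment (or unknown roles) default to redaction.
    return {k: _apply(str(v), policy.get(k, "redact")) for k, v in record.items()}


context = {"agent_role": "Investigator", "customer_jurisdiction": "EU",
           "consent_status": "active", "privacy_policy_version": "GDPR-v4.2"}
record = {"account_number": "DE89370400440532013000", "customer_name": "Anna Schmidt",
          "email": "anna@example.com"}
print(mask_for_agent(record, context))
```

Defaulting to redaction means a missing policy entry fails closed rather than exposing data.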

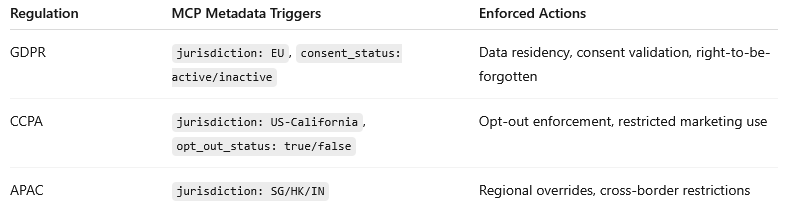

6.2 Jurisdiction-Aware Pipelines

MCP can play a crucial role in routing data through the correct jurisdiction-aware pipelines.

How MCP Helps:

Every transaction or customer record carries jurisdiction and consent metadata

The fraud engine routes data through compliant workflows:

EU transactions → stored only in EU-resident data stores

California consumers who opt out → are excluded from certain analytical models

APAC jurisdictions → custom regional overrides applied

Without MCP, such jurisdictional checks must be hard-coded in each component, increasing fragility and the risk of human error. With MCP, the context travels with the data, enabling every microservice or AI agent to enforce privacy consistently.
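The routing rules listed above can be sketched as a single function that reads jurisdiction and consent metadata and returns a processing plan. Store names, the `opted_out_of_sale_or_sharing` flag, the `APAC_` label prefix, and the transforms are hypothetical placeholders; the actual obligations under GDPR, CCPA, or APAC laws need to be set by legal and compliance teams.

```python
from dataclasses import dataclass, field


@dataclass
class RoutingPlan:
    data_store: str
    excluded_models: list = field(default_factory=list)
    transforms: list = field(default_factory=list)


def route(meta: dict) -> RoutingPlan:
    """Choose storage, model eligibility, and transforms from MCP-style metadata."""
    jurisdiction = meta["region_jurisdiction"]
    if jurisdiction == "GDPR_EU":
        # EU records stay in EU-resident storage.
        return RoutingPlan(data_store="eu-resident-store")
    if jurisdiction == "CCPA_US":
        plan = RoutingPlan(data_store="us-store")
        if meta.get("opted_out_of_sale_or_sharing"):
            # Opted-out consumers are kept out of certain analytical models.
            plan.excluded_models.append("cross_customer_analytics")
        return plan
    if jurisdiction.startswith("APAC_"):
        # Regional override: tokenized PII only, location generalized to region.
        return RoutingPlan(data_store="apac-store",
                           transforms=["tokenize_pii", "generalize_location_to_region"])
    # Unknown jurisdiction: fail closed to a quarantine store for manual review.
    return RoutingPlan(data_store="quarantine")


print(route({"region_jurisdiction": "APAC_JP"}))
print(route({"region_jurisdiction": "CCPA_US", "opted_out_of_sale_or_sharing": True}))
```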

Example

If an agent processes a transaction for a Japanese customer under APAC rules, the MCP metadata ensures that only tokenized PII is exposed and that location data is generalized to the regional level.

To summarize: MCP acts as a privacy gateway that stamps every piece of data with its jurisdiction, consent status, and role-based permissions. This allows:

Dynamic masking of sensitive fields

Accurate jurisdiction-based routing

Automated enforcement of privacy regulations at every step of the fraud detection pipeline

Consistent compliance without manual overrides

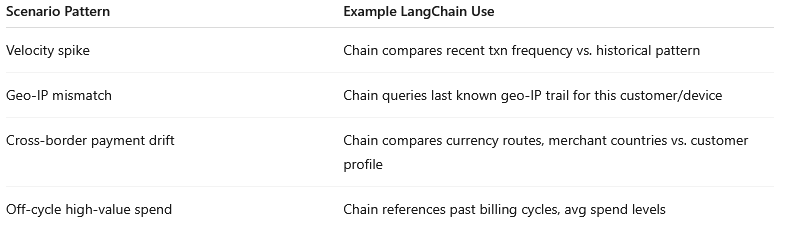

7. Real-Time Fraud Scenario Processing

One of the limitations of traditional fraud systems is that fraud rules operate in isolation—without memory or context. For example, a velocity spike or geo-IP mismatch may trigger alerts, but without understanding customer history or device behavior, these alerts generate many false positives and investigation noise.

LangChain changes this by bringing stateful, context-aware processing into the fraud detection pipeline.

7.1 Illustrative LangChain Scenario Chains

In this architecture, fraud patterns are modeled not as simple rules but as LangChain scenario chains.

7.2 Stateful Processing

LangChain’s memory features can enable stateful, personalized processing using a combination of customer memory (e.g., location history), device memory (e.g., device fingerprints), and transaction memory (e.g., similar past transactions). Each fraud scenario can be implemented as a LangChain chain with steps such as:

Context retrieval from memory

Rule logic or ML scoring

Reasoning via LLM (for complex cases)

Result assembly with confidence score

This allows for more nuanced detection, for example:

Instead of “3 txns in 1 min triggers an alert,” the system detects: “3 txns in 1 min is abnormal for this customer given their 6-month pattern — hence medium risk.”
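The difference between the two statements can be shown with a small worked example: instead of a fixed threshold, compare the current burst against the customer's own history. The sketch uses a z-score over the customer's past per-minute transaction counts; the z-score itself, the band thresholds, and the in-memory history are assumptions standing in for LangChain memory and a production scoring model.

```python
from statistics import mean, pstdev


def velocity_risk(history_counts: list[int], current_count: int) -> tuple[str, float]:
    """Score a burst against this customer's own baseline (z-score).

    history_counts: transactions per active minute over, say, six months
    (hypothetical customer memory). Returns (risk_band, z_score).
    """
    mu = mean(history_counts)
    sigma = pstdev(history_counts) or 1.0  # avoid divide-by-zero for flat histories
    z = (current_count - mu) / sigma
    if z >= 4:
        band = "high"
    elif z >= 2:
        band = "medium"
    else:
        band = "low"
    return band, round(z, 2)


# Customer A usually makes one or two transactions a minute; 3 is unusual.
print(velocity_risk([1, 1, 2, 1, 2, 1, 1, 2, 1, 2], 3))
# Customer B (e.g., a small merchant) routinely bursts; 3 in a minute is normal.
print(velocity_risk([3, 4, 2, 5, 3, 4, 3, 4], 3))
```

The same count of three transactions lands in the medium band for customer A and the low band for customer B, which is the personalization a static rule cannot express.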

7.3 Innovative Scenarios for Consideration

Voice Spoof Detection

Compares input voice to biometric voiceprint

Flags robotic/synthesized speech

IP & Language Drift

Flags login-IP mismatch from historical IP map

Detects keyboard-language mismatch (e.g., EN keyboard in RU geo)
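Both drift signals reduce to comparisons against stored history. The sketch measures the great-circle (haversine) distance from a login's geo-IP location to the nearest previously seen location, and checks the keyboard language against a hypothetical table of expected languages per country; the 500 km threshold and the `EXPECTED_LANGUAGES` table are assumptions.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
# Hypothetical expected keyboard languages per geo-IP country.
EXPECTED_LANGUAGES = {"RU": {"ru"}, "DE": {"de", "en"}, "US": {"en", "es"}}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    dlat, dlon = radians(lat2 - lat1), radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def drift_flags(login: dict, ip_history: list[tuple[float, float]],
                max_km: float = 500.0) -> list[str]:
    """Flag IP and language drift; assumes ip_history is non-empty."""
    flags = []
    nearest = min(haversine_km(login["lat"], login["lon"], lat, lon) for lat, lon in ip_history)
    if nearest > max_km:
        flags.append(f"ip_drift: {nearest:.0f} km from nearest known location")
    expected = EXPECTED_LANGUAGES.get(login["ip_country"])
    if expected is not None and login["keyboard_lang"] not in expected:
        flags.append(f"language_drift: '{login['keyboard_lang']}' keyboard in {login['ip_country']}")
    return flags


history = [(52.5200, 13.4050), (48.1351, 11.5820)]  # Berlin, Munich
login = {"lat": 55.7558, "lon": 37.6173, "ip_country": "RU", "keyboard_lang": "en"}  # Moscow
print(drift_flags(login, history))
```

In a scenario chain these flags would be inputs to scoring and LLM reasoning rather than alerts on their own.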

To summarize: LangChain can bring contextual awareness to real-time processing, resulting in more effective anomaly detection.

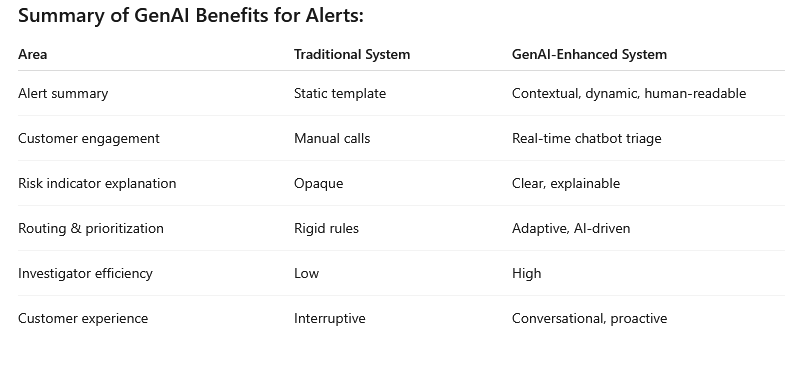

8. Alert Generation & Processing

In traditional fraud detection systems, alert generation is mostly template-driven and technical, producing rigid alerts that lack nuance or business context.

As a result:

Alerts overwhelm analysts with raw, fragmented data

Analysts must manually interpret what the alert means

Many alerts lack sufficient context for immediate action or dismissal

GenAI can significantly improve this layer by generating:

Contextual, human-readable summaries of alerts

Conversational chatbot triage with customers on flagged transactions

This can reduce false positives, accelerate response times, and improve both analyst productivity and customer experience.

8.1 Structured Alert Synthesis using GenAI

Rather than relying on hard-coded templates, the system can use LangChain prompt templates with LLMs to create structured, rich, and human-readable alerts. Each generated alert:

Summarizes complex fraud scenarios in plain language

Extracts key risk indicators

Adds customer context (history, behavior)

Embeds recommended next steps for investigation or closure
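A sketch of the prompt-assembly step: structured risk indicators are rendered into a prompt that asks the model for the four elements listed above. It uses the standard library's `string.Template` so it runs without dependencies; in a LangChain pipeline, `PromptTemplate` from `langchain_core.prompts` would play the same role. The field names and wording are illustrative, and the LLM call itself is left out.

```python
from string import Template

ALERT_PROMPT = Template("""You are a fraud analyst assistant. Write a concise alert.
Transaction: $amount $currency to $destination_country via $channel.
Customer baseline: $baseline
Risk indicators:
$indicators
Risk score: $score/100
Return: (1) a plain-language summary, (2) key risk indicators,
(3) relevant customer context, (4) a recommended next step.""")


def build_alert_prompt(alert: dict) -> str:
    """Render structured alert data into an LLM prompt (hypothetical field names)."""
    indicators = "\n".join(f"- {item}" for item in alert["indicators"])
    return ALERT_PROMPT.substitute(
        amount=f"{alert['amount']:,.0f}",
        currency=alert["currency"],
        destination_country=alert["destination_country"],
        channel=alert["channel"],
        baseline=alert["baseline"],
        indicators=indicators,
        score=alert["score"],
    )


prompt = build_alert_prompt({
    "amount": 20000, "currency": "USD", "destination_country": "Turkey",
    "channel": "MobileApp", "baseline": "typical payments within the Eurozone",
    "indicators": ["previously unseen device", "login from new high-risk IP"],
    "score": 85,
})
print(prompt)
```

Keeping the facts structured and letting the model only phrase them makes it easier to check the generated alert against its inputs.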

Example: GenAI-generated alert

Alert Summary: Unusual cross-border transaction of $20,000 to Turkey, inconsistent with customer’s typical Eurozone payments.

Device used is previously unseen.

Location anomaly: login from new high-risk IP

Risk Score: 85/100

Next Action: Manual investigation recommended. Contact customer for verification.

8.2 GenAI-Powered Chatbot Triage Assistant

Another potential opportunity is using GenAI chatbots as the first line of triage with customers, for example:

Illustrative Scenario:

A suspicious transaction is flagged (e.g., foreign payment, late-night spend, new device)

Before escalating to an analyst, a chatbot reaches out to the customer in real-time, as follows:

Chatbot Conversation Example

Bot: “We detected an unusual $20,000 payment to Turkey on your card ending in 1234. Did you authorize this transaction? (Yes / No / Not sure)”

Customer: “No”

Bot: “Thank you. We are freezing the transaction and escalating to our fraud team. You will not be charged.”

8.3 Benefits

Alerts are instantly understandable to investigators

Reduces triage time

Helps route the alert to the right analyst (e.g., geo-risk expert)

Greatly reduces the burden of “alert fatigue”

Real-time customer verification reduces false positives

Provides audit trail of customer confirmations/denials

Resumable investigations

Rollback support
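The triage exchange in 8.2 can be reduced to a small decision step that maps the customer's reply to an action and records it for audit. The action names and the choice to treat "Not sure" or unrecognized replies as a hold-and-escalate are assumptions for illustration; a real flow would also authenticate the customer before acting on the reply.

```python
from datetime import datetime, timezone

# Hypothetical mapping from a normalized customer reply to a case action.
ACTIONS = {
    "yes": "release_transaction",
    "no": "freeze_and_escalate",
    "not sure": "hold_and_escalate",
}

audit_log: list[dict] = []


def handle_reply(case_id: str, reply: str) -> str:
    """Map the customer's reply to an action and append an audit entry."""
    normalized = reply.strip().lower()
    # Anything unrecognized is treated cautiously, like "Not sure".
    action = ACTIONS.get(normalized, "hold_and_escalate")
    audit_log.append({
        "case_id": case_id,
        "reply": reply,
        "action": action,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return action


print(handle_reply("CASE-1234", "No"))
print(handle_reply("CASE-1235", "maybe?"))
print(len(audit_log))
```

Every reply, including ambiguous ones, leaves a timestamped audit entry, which supports the audit-trail benefit listed above.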

To summarize: With LLMs, static alert-generation templates can be replaced by contextual alert messages and real-time triage with customers.

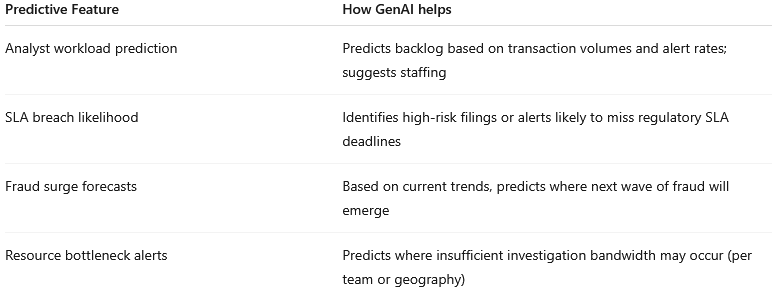

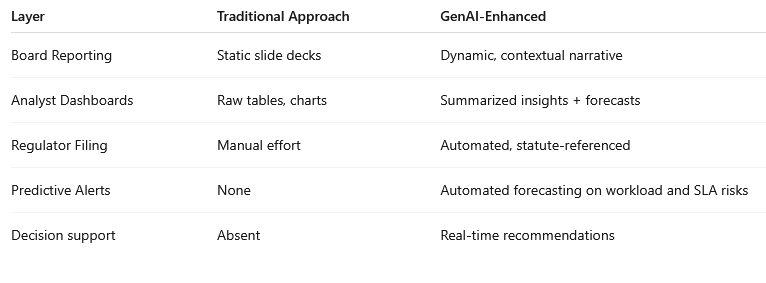

9. GenAI Reporting & Predictive Analytics

One of the least modernized layers in traditional fraud systems is reporting:

Reports are static, templated PDFs or CSVs

Different audiences (Board, Regulators, Analysts) get one-size-fits-all outputs

Reports lack narrative intelligence or actionable recommendations

There is no forward-looking intelligence: What should we expect tomorrow?

GenAI (using LLMs + LangChain + Predictive Models) transforms this layer in two ways:

Creates multi-stakeholder, context-aware reports

Adds predictive intelligence — helping leaders anticipate trends and bottlenecks

9.1 Multi-Stakeholder Reports — Personalized with GenAI

In modern architectures, LangChain Prompt Templates + LLMs dynamically generate reports tailored to each audience.

| Audience | Content | Format |
|---|---|---|
| Board | Strategic summary | PPT, PDF |
| Analysts | Investigation dashboards | Real-time web view |
| Regulators | Compliance reports | Structured filing (XML/JSON/PDF) |

9.2 Intelligent Reporting — Adding Predictive Insights

Most traditional reporting is backward-looking. Combining GenAI with predictive modeling lets the system forecast upcoming workload and risk, and prioritize work for human operators.
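As a worked example of the kind of workload forecast such a system might surface, the sketch projects daily alert volume at a constant growth rate and finds the first day the analyst backlog exceeds a tolerance. The growth rate, capacity, and tolerance are made-up inputs, and constant growth is a deliberately naive stand-in for a real forecasting model.

```python
def first_saturation_day(current_daily_alerts: float, daily_growth: float,
                         analyst_capacity: float, backlog: float,
                         max_backlog: float, horizon_days: int = 14):
    """Return the first day (1-based) the projected backlog exceeds max_backlog, or None.

    Assumes alerts grow at a constant daily rate and analysts clear a fixed
    number of alerts per day (a naive stand-in for a real forecasting model).
    """
    alerts = current_daily_alerts
    for day in range(1, horizon_days + 1):
        alerts *= 1 + daily_growth
        # Backlog grows by the day's surplus and never drops below zero.
        backlog = max(0.0, backlog + alerts - analyst_capacity)
        if backlog > max_backlog:
            return day
    return None


# Hypothetical inputs: 1,000 alerts/day growing 4% daily, team clears 1,050/day.
print(first_saturation_day(1000, 0.04, 1050, backlog=100, max_backlog=150))
```

With these inputs the backlog goes from 100 to about 90, 122, and then 196 alerts, so the function returns day 3; an LLM layer would then turn that number into a staffing recommendation.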

Example GenAI Output to Fraud Ops Manager:

“Expected alert volume surge: +23% over next 5 days — driven by suspected malware campaign in UK.

Analyst queue saturation expected by Day 3; recommend urgent staffing allocation.”

9.3 Benefits

10. Conclusion

The evolution of financial crime demands that fraud detection systems move beyond static rules and fragmented data toward a contextual, intelligent, and proactive architecture.

This white paper shows how combining Model Context Protocol (MCP), LangChain, and Generative AI (GenAI) addresses the shortcomings of legacy systems in key ways:

Data Privacy & Compliance: MCP brings dynamic field-level masking and jurisdiction-aware pipelines, ensuring built-in GDPR/CCPA/APAC compliance — not bolt-on privacy.

Real-Time Fraud Processing: LangChain enables fraud scenario chains that are context-aware, stateful, and capable of reasoning on customer behavior, device trails, and transaction vectors.

GenAI-Powered Alerts & Triage: Human-readable alert synthesis, intelligent prioritization, and chatbot-based triage dramatically reduce alert fatigue and increase investigation efficiency.

Predictive Reporting & Analytics: GenAI transforms reporting from backward-looking PDFs into real-time, personalized dashboards with predictive insights on analyst workload, SLA risk, and fraud trends — helping leadership stay ahead of emerging threats.

The result is an AI-native, privacy-respecting, and continuously learning fraud detection ecosystem — one that scales with business needs while meeting ever-evolving regulatory expectations.